Tokenization

How LLM's break text into tokens and how to use them effectively.

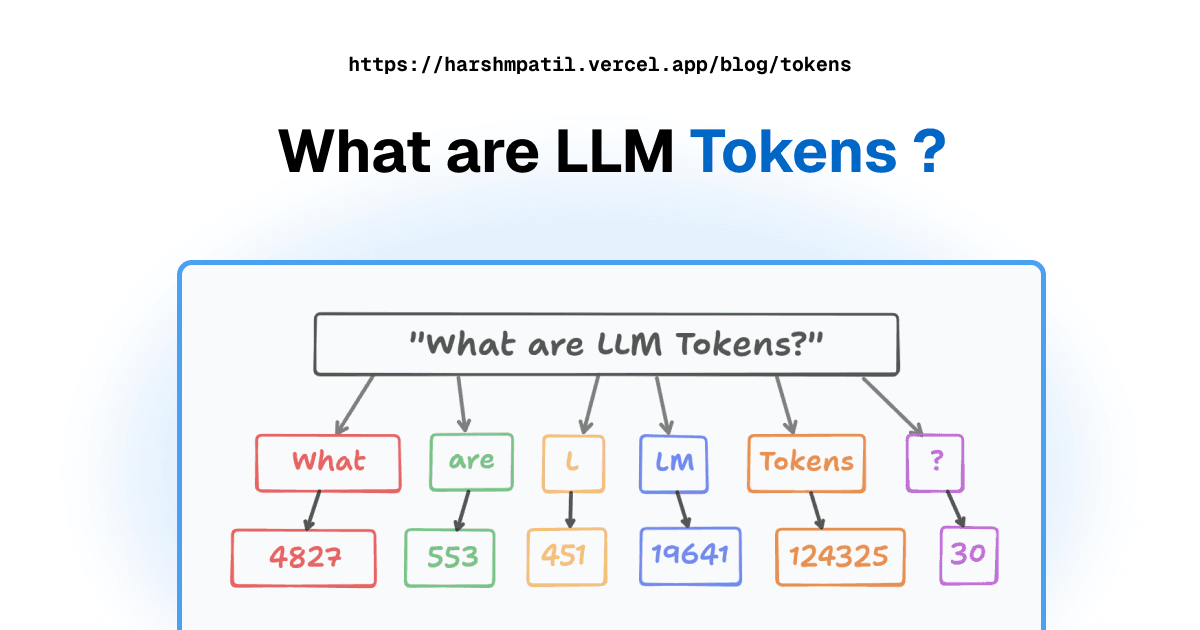

Large Language Models (LLMs) process text by breaking it down into smaller units called tokens.

We are charged per million tokens input and output by most LLM providers. The context length is also measured in tokens. So generally the lesser the tokens the better.

Not completed yet, stay tuned! Try the interaractive tokenizer in the meantime.

Input

Output tokens (text)

Output tokens (token Ids)

[ ]

0 Tokens0 Characters0 Words